Web scraping

Use case

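Web research is one of the most compelling LLM applications:

- Users have flagged it as one of the AI tools they want most.

- OSS repositories such as gpt-researcher are growing in popularity.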

Overview

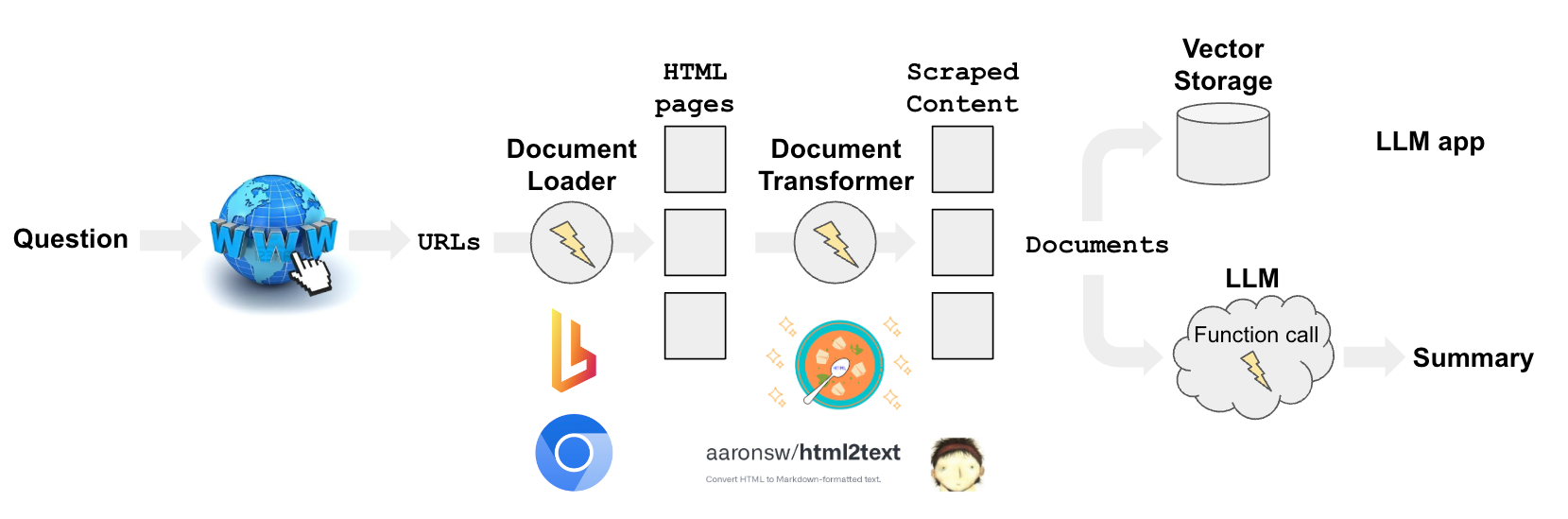

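Gathering content from the web has a few components:

- Search: query to URL (e.g., using GoogleSearchAPIWrapper).

- Loading: URL to HTML (e.g., using AsyncHtmlLoader, AsyncChromiumLoader, etc.).

- Transforming: HTML to formatted text (e.g., using HTML2Text or Beautiful Soup).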
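
The three stages compose into a simple pipeline. The sketch below is only an illustration under stated assumptions: `FAKE_INDEX`, `FAKE_WEB`, `search`, `load`, `transform`, and `gather_content` are hypothetical stand-ins written for this example, not LangChain APIs, and no network access is involved.

```python
import re

# Hypothetical in-memory stand-ins for a search engine and for the web.
FAKE_INDEX = {"llm agents": ["https://example.com/agents"]}
FAKE_WEB = {
    "https://example.com/agents": "<html><body><p>Agents use tools.</p></body></html>"
}


def search(query: str) -> list[str]:
    """Search stage: query -> URLs."""
    return FAKE_INDEX.get(query.lower(), [])


def load(url: str) -> str:
    """Loading stage: URL -> raw HTML."""
    return FAKE_WEB[url]


def transform(html: str) -> str:
    """Transforming stage: HTML -> plain text (naive tag stripping)."""
    text = re.sub(r"<[^>]+>", " ", html)
    return " ".join(text.split())


def gather_content(query: str) -> list[str]:
    """Run the three stages in order for every URL the search returns."""
    return [transform(load(url)) for url in search(query)]


pages = gather_content("LLM agents")
print(pages)
```

Keeping each stage behind its own function makes it easy to swap one implementation (for example, a different loader) without touching the others.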

Quickstart

pip install -q langchain-openai langchain playwright beautifulsoup4

playwright install

# Set env var OPENAI_API_KEY or load from a .env file:

# import dotenv

# dotenv.load_dotenv()

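Scrape HTML content using a headless instance of Chromium.

- The asynchronous nature of the scraping process is handled with Python's asyncio library.

- The actual interaction with the web pages is handled by Playwright.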
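
To make the asyncio point concrete, here is a minimal sketch of concurrent page loading. The `fetch` coroutine is a hypothetical stand-in that sleeps instead of driving a browser; it is not how AsyncChromiumLoader is implemented.

```python
import asyncio


async def fetch(url: str) -> str:
    """Hypothetical stand-in for a page load; sleeps instead of doing I/O."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def fetch_all(urls: list[str]) -> list[str]:
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fetch(u) for u in urls))


results = asyncio.run(fetch_all(["https://a.example", "https://b.example"]))
print(results)
```

Inside a notebook that already has a running event loop, `asyncio.run` raises an error; there you would `await fetch_all(...)` directly instead.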

from langchain_community.document_loaders import AsyncChromiumLoader

from langchain_community.document_transformers import BeautifulSoupTransformer

# Load HTML

loader = AsyncChromiumLoader(["https://www.wsj.com"])

html = loader.load()

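Extract text from content tags in the HTML, such as the <p>, <li>, <div>, and <a> tags.

- <p>: The paragraph tag. It defines a paragraph in HTML and groups related sentences and/or phrases.

- <li>: The list item tag. It is used within ordered (<ol>) and unordered (<ul>) lists to define the individual items in the list.

- <div>: The division tag. It is a block-level element used to group other inline or block-level elements.

- <a>: The anchor tag. It is used to define hyperlinks.

- <span>: An inline container used to mark up a part of a text or a part of a document.

On many news websites (e.g., WSJ, CNN), headlines and summaries are wrapped in <span> tags.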
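
As a rough illustration of what extracting by tag means, the sketch below collects text found inside a chosen set of tags using only the standard library. `TagTextExtractor` and `extract_text` are hypothetical helpers for this example and make no claim about how BeautifulSoupTransformer works internally.

```python
from html.parser import HTMLParser


class TagTextExtractor(HTMLParser):
    """Collect text that appears inside any of the requested tags."""

    def __init__(self, tags: list[str]):
        super().__init__()
        self.tags = set(tags)
        self.depth = 0  # number of requested tags currently open
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.tags:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in self.tags and self.depth > 0:
            self.depth -= 1

    def handle_data(self, data):
        # Keep text only while we are inside at least one requested tag.
        if self.depth > 0 and data.strip():
            self.chunks.append(data.strip())


def extract_text(html: str, tags: list[str]) -> str:
    parser = TagTextExtractor(tags)
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


sample = "<div><span>Headline</span><p>Body text</p><span>Summary</span></div>"
print(extract_text(sample, ["span"]))  # prints "Headline Summary"
```

Asking for `["span"]` skips the paragraph body entirely, which is why tag choice matters so much for news pages.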

# Transform

bs_transformer = BeautifulSoupTransformer()

docs_transformed = bs_transformer.transform_documents(html, tags_to_extract=["span"])

# Result

docs_transformed[0].page_content[0:500]

'English EditionEnglish中文 (Chinese)日本語 (Japanese) More Other Products from WSJBuy Side from WSJWSJ ShopWSJ Wine Other Products from WSJ Search Quotes and Companies Search Quotes and Companies 0.15% 0.03% 0.12% -0.42% 4.102% -0.69% -0.25% -0.15% -1.82% 0.24% 0.19% -1.10% About Evan His Family Reflects His Reporting How You Can Help Write a Message Life in Detention Latest News Get Email Updates Four Americans Released From Iranian Prison The Americans will remain under house arrest until they are '

These Documents are now staged for downstream use in various LLM apps, as discussed below.

Loader

AsyncHtmlLoader

AsyncHtmlLoader uses the aiohttp library to make asynchronous HTTP requests, which suits simpler, lightweight scraping.

AsyncChromiumLoader

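AsyncChromiumLoader uses Playwright to launch a Chromium instance, which can handle JavaScript rendering and more complex web interactions.

Chromium is one of the browsers supported by Playwright, a library used to control browser automation.

Headless mode means that the browser runs without a graphical user interface, which is common for web scraping.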
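
One way to think about the choice between the two loaders: if the raw HTML already contains the text you need, a plain HTTP fetch is enough; if the page is an empty shell filled in by JavaScript, a browser is needed. The sketch below is a heuristic invented for this example (`needs_browser` and its `min_chars` threshold are assumptions, not part of either loader).

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text that is outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self.skip = 0  # number of open <script>/<style> elements
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip > 0:
            self.skip -= 1

    def handle_data(self, data):
        if self.skip == 0 and data.strip():
            self.parts.append(data.strip())


def needs_browser(html: str, min_chars: int = 20) -> bool:
    """Heuristic: very little visible text suggests JavaScript-rendered content."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.parts)) < min_chars


static_page = "<html><body><h1>Scores</h1><p>Final: 3-1 after extra time.</p></body></html>"
js_page = '<html><body><div id="root"></div><script>render(document.getElementById("root"))</script></body></html>'
print(needs_browser(static_page), needs_browser(js_page))  # prints "False True"
```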

from langchain_community.document_loaders import AsyncHtmlLoader

urls = ["https://www.espn.com", "https://lilianweng.github.io/posts/2023-06-23-agent/"]

loader = AsyncHtmlLoader(urls)

docs = loader.load()

Transformer

HTML2Text

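HTML2Text converts HTML content directly into plain text (with markdown-like formatting) without any tag-specific manipulation.

It is best suited to scenarios where the goal is to extract human-readable text without manipulating specific HTML elements.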

Beautiful Soup

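Beautiful Soup offers more fine-grained control over HTML content, supporting extraction of specific tags, removal of tags, and content cleaning.

It is suited to cases where you want to extract specific information and clean up the HTML content as needed.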
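
To contrast the two approaches, whole-document conversion keeps all text and only decorates it with light markup, with no tag selection. The sketch below is a toy converter written for this example (`MarkdownishConverter` and `to_text` are hypothetical); it is far simpler than the html2text library and is not its implementation.

```python
from html.parser import HTMLParser


class MarkdownishConverter(HTMLParser):
    """Convert headings and list items to markdown-like lines; keep other text."""

    PREFIXES = {"h1": "# ", "h2": "## ", "h3": "### ", "li": "* "}

    def __init__(self):
        super().__init__()
        self.lines: list[str] = []
        self.prefix = ""

    def handle_starttag(self, tag, attrs):
        # Remember the prefix to apply to the next piece of text.
        self.prefix = self.PREFIXES.get(tag, "")

    def handle_data(self, data):
        text = " ".join(data.split())
        if text:
            self.lines.append(self.prefix + text)
            self.prefix = ""


def to_text(html: str) -> str:
    converter = MarkdownishConverter()
    converter.feed(html)
    converter.close()
    return "\n".join(converter.lines)


sample = "<h2>ESPN</h2><ul><li>NFL</li><li>MLB</li></ul><p>More</p>"
print(to_text(sample))
```

Every piece of text survives the conversion, whereas a tag-based extractor would keep only what sits inside the tags you name.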

from langchain_community.document_loaders import AsyncHtmlLoader

urls = ["https://www.espn.com", "https://lilianweng.github.io/posts/2023-06-23-agent/"]

loader = AsyncHtmlLoader(urls)

docs = loader.load()

Fetching pages: 100%|#############################################################################################################| 2/2 [00:00<00:00, 7.01it/s]

from langchain_community.document_transformers import Html2TextTransformer

html2text = Html2TextTransformer()

docs_transformed = html2text.transform_documents(docs)

docs_transformed[0].page_content[0:500]

"Skip to main content Skip to navigation\n\n<\n\n>\n\nMenu\n\n## ESPN\n\n * Search\n\n * * scores\n\n * NFL\n * MLB\n * NBA\n * NHL\n * Soccer\n * NCAAF\n * …\n\n * Women's World Cup\n * LLWS\n * NCAAM\n * NCAAW\n * Sports Betting\n * Boxing\n * CFL\n * NCAA\n * Cricket\n * F1\n * Golf\n * Horse\n * MMA\n * NASCAR\n * NBA G League\n * Olympic Sports\n * PLL\n * Racing\n * RN BB\n * RN FB\n * Rugby\n * Tennis\n * WNBA\n * WWE\n * X Games\n * XFL\n\n * More"

Scraping with extraction

LLM with function calling

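Web scraping is challenging for many reasons.

One of them is the changing nature of modern websites' layouts and content, which requires modifying scraping scripts to accommodate the changes.

Using function calling (e.g., OpenAI Functions) with an extraction chain, we avoid having to change the code constantly when websites change.

We use gpt-3.5-turbo-0613 to guarantee access to the OpenAI Functions feature (although it may be available to everyone by the time of writing).

We also keep the temperature at 0 to keep the randomness of the LLM down.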
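
The effect of temperature can be sketched with a temperature-scaled softmax. This is a generic illustration, not a description of how any hosted model is implemented; the logits are made-up numbers, and treating temperature 0 as a greedy argmax is the usual convention assumed here.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into probabilities; temperature 0 is treated as greedy argmax."""
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.5, 0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

As the temperature drops, probability mass concentrates on the highest-scoring token, so repeated runs on the same input become more consistent, which is what an extraction task wants.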

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")

Define a schema

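Next, you define a schema to specify what kind of data you want to extract.

Here, the key names matter because they tell the LLM what kind of information you want.

So, be as detailed as possible.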

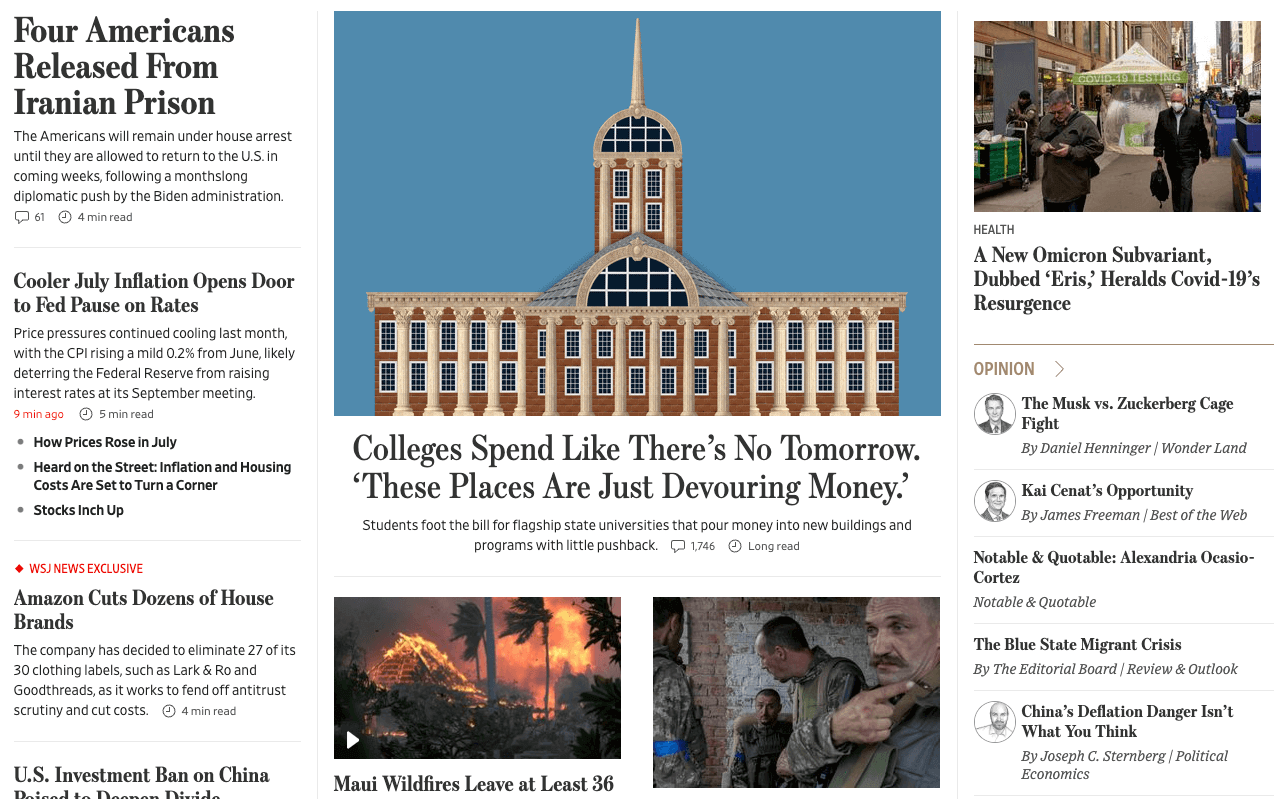

In this example, we want to scrape only the titles and summaries of news articles from The Wall Street Journal website.

from langchain.chains import create_extraction_chain

schema = {
    "properties": {
        "news_article_title": {"type": "string"},
        "news_article_summary": {"type": "string"},
    },
    "required": ["news_article_title", "news_article_summary"],
}


def extract(content: str, schema: dict):
    return create_extraction_chain(schema=schema, llm=llm).run(content)
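
Since the extraction result is a list of dicts keyed by the schema's property names, a small check can flag items where the model left a required field out. The helper below, `missing_keys`, is a hypothetical addition for illustration and is not part of LangChain; the sample items are made up.

```python
schema = {
    "properties": {
        "news_article_title": {"type": "string"},
        "news_article_summary": {"type": "string"},
    },
    "required": ["news_article_title", "news_article_summary"],
}


def missing_keys(item: dict, schema: dict) -> list[str]:
    """Return required keys that are absent, None, or not strings in an item."""
    problems = []
    for key in schema.get("required", []):
        expected = schema["properties"].get(key, {}).get("type")
        value = item.get(key)
        if value is None or (expected == "string" and not isinstance(value, str)):
            problems.append(key)
    return problems


# Made-up items standing in for extraction output.
items = [
    {"news_article_title": "A headline", "news_article_summary": "A summary."},
    {"news_article_title": "Only a headline"},
]
for item in items:
    print(item.get("news_article_title"), "->", missing_keys(item, schema))
```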

Run the web scraper w/ BeautifulSoup

As shown above, we will use BeautifulSoupTransformer.

import pprint

from langchain_text_splitters import RecursiveCharacterTextSplitter

def scrape_with_playwright(urls, schema):
    loader = AsyncChromiumLoader(urls)
    docs = loader.load()
    bs_transformer = BeautifulSoupTransformer()
    docs_transformed = bs_transformer.transform_documents(
        docs, tags_to_extract=["span"]
    )
    print("Extracting content with LLM")

    # Grab the first 1000 tokens of the site
    splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
        chunk_size=1000, chunk_overlap=0
    )
    splits = splitter.split_documents(docs_transformed)

    # Process the first split
    extracted_content = extract(schema=schema, content=splits[0].page_content)
    pprint.pprint(extracted_content)
    return extracted_content

urls = ["https://www.wsj.com"]

extracted_content = scrape_with_playwright(urls, schema=schema)

Extracting content with LLM

[{'news_article_summary': 'The Americans will remain under house arrest until '

'they are allowed to return to the U.S. in coming '

'weeks, following a monthslong diplomatic push by '

'the Biden administration.',

'news_article_title': 'Four Americans Released From Iranian Prison'},

{'news_article_summary': 'Price pressures continued cooling last month, with '

'the CPI rising a mild 0.2% from June, likely '

'deterring the Federal Reserve from raising interest '

'rates at its September meeting.',

'news_article_title': 'Cooler July Inflation Opens Door to Fed Pause on '

'Rates'},

{'news_article_summary': 'The company has decided to eliminate 27 of its 30 '

'clothing labels, such as Lark & Ro and Goodthreads, '

'as it works to fend off antitrust scrutiny and cut '

'costs.',

'news_article_title': 'Amazon Cuts Dozens of House Brands'},

{'news_article_summary': 'President Biden’s order comes on top of a slowing '

'Chinese economy, Covid lockdowns and rising '

'tensions between the two powers.',

'news_article_title': 'U.S. Investment Ban on China Poised to Deepen Divide'},

{'news_article_summary': 'The proposed trial date in the '

'election-interference case comes on the same day as '

'the former president’s not guilty plea on '

'additional Mar-a-Lago charges.',

'news_article_title': 'Trump Should Be Tried in January, Prosecutors Tell '

'Judge'},

{'news_article_summary': 'The CEO who started in June says the platform has '

'“an entirely different road map” for the future.',

'news_article_title': 'Yaccarino Says X Is Watching Threads but Has Its Own '

'Vision'},

{'news_article_summary': 'Students foot the bill for flagship state '

'universities that pour money into new buildings and '

'programs with little pushback.',

'news_article_title': 'Colleges Spend Like There’s No Tomorrow. ‘These '

'Places Are Just Devouring Money.’'},

{'news_article_summary': 'Wildfires fanned by hurricane winds have torn '

'through parts of the Hawaiian island, devastating '

'the popular tourist town of Lahaina.',

'news_article_title': 'Maui Wildfires Leave at Least 36 Dead'},

{'news_article_summary': 'After its large armored push stalled, Kyiv has '

'fallen back on the kind of tactics that brought it '

'success earlier in the war.',

'news_article_title': 'Ukraine Uses Small-Unit Tactics to Retake Captured '

'Territory'},

{'news_article_summary': 'President Guillermo Lasso says the Aug. 20 election '

'will proceed, as the Andean country grapples with '

'rising drug gang violence.',

'news_article_title': 'Ecuador Declares State of Emergency After '

'Presidential Hopeful Killed'},

{'news_article_summary': 'This year’s hurricane season, which typically runs '

'from June to the end of November, has been '

'difficult to predict, climate scientists said.',

'news_article_title': 'Atlantic Hurricane Season Prediction Increased to '

'‘Above Normal,’ NOAA Says'},

{'news_article_summary': 'The NFL is raising the price of its NFL+ streaming '

'packages as it adds the NFL Network and RedZone.',

'news_article_title': 'NFL to Raise Price of NFL+ Streaming Packages as It '

'Adds NFL Network, RedZone'},

{'news_article_summary': 'Russia is planning a moon mission as part of the '

'new space race.',

'news_article_title': 'Russia’s Moon Mission and the New Space Race'},

{'news_article_summary': 'Tapestry’s $8.5 billion acquisition of Capri would '

'create a conglomerate with more than $12 billion in '

'annual sales, but it would still lack the '

'high-wattage labels and diversity that have fueled '

'LVMH’s success.',

'news_article_title': "Why the Coach and Kors Marriage Doesn't Scare LVMH"},

{'news_article_summary': 'The Supreme Court has blocked Purdue Pharma’s $6 '

'billion Sackler opioid settlement.',

'news_article_title': 'Supreme Court Blocks Purdue Pharma’s $6 Billion '

'Sackler Opioid Settlement'},

{'news_article_summary': 'The Social Security COLA is expected to rise in '

'2024, but not by a lot.',

'news_article_title': 'Social Security COLA Expected to Rise in 2024, but '

'Not by a Lot'}]

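We can compare the scraped headlines to the page:

Looking at the LangSmith trace, we can see what is going on under the hood:

- It follows what is explained in the extraction documentation.

- We call the information extraction function on the input text.

- It attempts to populate the provided schema from the URL content.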

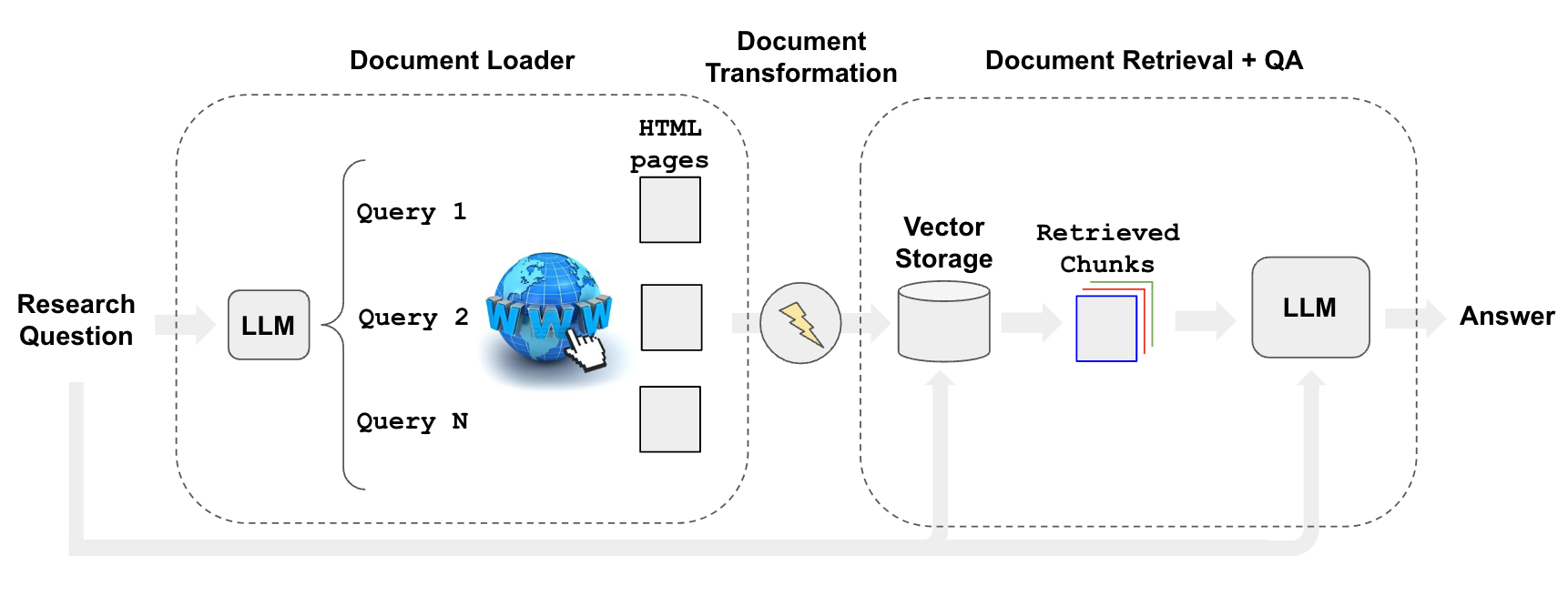

Research automation

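Related to scraping, we may want to answer specific questions using searched content.

We can automate the process of web research using a retriever such as WebResearchRetriever.

Copy the requirements from here:

Install the dependencies with: pip install -r requirements.txt

Set GOOGLE_CSE_ID and GOOGLE_API_KEY.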

from langchain.retrievers.web_research import WebResearchRetriever

from langchain_community.utilities import GoogleSearchAPIWrapper

from langchain_community.vectorstores import Chroma

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

# Vectorstore

vectorstore = Chroma(

embedding_function=OpenAIEmbeddings(), persist_directory="./chroma_db_oai"

)

# LLM

llm = ChatOpenAI(temperature=0)

# Search

search = GoogleSearchAPIWrapper()

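Initialize the retriever with the tools above. It will:

- Use an LLM to generate multiple relevant search queries (one LLM call)

- Execute a search for each query

- Choose the top K links per query (multiple search calls in parallel)

- Load the information from all chosen links (scrape pages in parallel)

- Index those documents into a vectorstore

- Find the most relevant documents for each originally generated search query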
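
The first steps above (generate queries, search, keep the top K links, skip URLs already loaded) can be sketched in plain Python. Everything here is a stand-in for illustration: `FAKE_RESULTS`, `generate_queries`, `top_k_links`, and `select_urls` are hypothetical and do not reflect WebResearchRetriever's internals.

```python
from typing import Optional

# Hypothetical search results keyed by query; a real run would call a search API.
FAKE_RESULTS = {
    "how do agents plan": [
        "https://a.example/1",
        "https://b.example/2",
        "https://c.example/3",
    ],
    "how do agents use tools": ["https://b.example/2", "https://d.example/4"],
}


def generate_queries(question: str) -> list[str]:
    """Stand-in for the single LLM call that rewrites a question into queries."""
    return list(FAKE_RESULTS)


def top_k_links(query: str, k: int) -> list[str]:
    """Stand-in for one search call, truncated to the top k results."""
    return FAKE_RESULTS.get(query, [])[:k]


def select_urls(question: str, k: int = 2, already_loaded: Optional[set] = None) -> list[str]:
    """Collect the top-k links per query, dropping duplicates and known URLs."""
    seen = set(already_loaded or ())
    selected = []
    for query in generate_queries(question):
        for url in top_k_links(query, k):
            if url not in seen:
                seen.add(url)
                selected.append(url)
    return selected


print(select_urls("How do agents work?"))
```

Skipping URLs that were loaded earlier means a repeated question triggers no new page loads; only the selected URLs would go on to be scraped and indexed.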

# Initialize

web_research_retriever = WebResearchRetriever.from_llm(

vectorstore=vectorstore, llm=llm, search=search

)

# Run

import logging

logging.basicConfig()

logging.getLogger("langchain.retrievers.web_research").setLevel(logging.INFO)

from langchain.chains import RetrievalQAWithSourcesChain

user_input = "How do LLM Powered Autonomous Agents work?"

qa_chain = RetrievalQAWithSourcesChain.from_chain_type(

llm, retriever=web_research_retriever

)

result = qa_chain({"question": user_input})

result

INFO:langchain.retrievers.web_research:Generating questions for Google Search ...

INFO:langchain.retrievers.web_research:Questions for Google Search (raw): {'question': 'How do LLM Powered Autonomous Agents work?', 'text': LineList(lines=['1. What is the functioning principle of LLM Powered Autonomous Agents?\n', '2. How do LLM Powered Autonomous Agents operate?\n'])}

INFO:langchain.retrievers.web_research:Questions for Google Search: ['1. What is the functioning principle of LLM Powered Autonomous Agents?\n', '2. How do LLM Powered Autonomous Agents operate?\n']

INFO:langchain.retrievers.web_research:Searching for relevant urls ...

INFO:langchain.retrievers.web_research:Searching for relevant urls ...

INFO:langchain.retrievers.web_research:Search results: [{'title': 'LLM Powered Autonomous Agents | Hacker News', 'link': 'https://news.ycombinator.com/item?id=36488871', 'snippet': 'Jun 26, 2023 ... Exactly. A temperature of 0 means you always pick the highest probability token (i.e. the "max" function), while a temperature of 1 means you\xa0...'}]

INFO:langchain.retrievers.web_research:Searching for relevant urls ...

INFO:langchain.retrievers.web_research:Search results: [{'title': "LLM Powered Autonomous Agents | Lil'Log", 'link': 'https://lilianweng.github.io/posts/2023-06-23-agent/', 'snippet': 'Jun 23, 2023 ... Task decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\\n1." , "What are the subgoals for achieving XYZ?" , (2) by\xa0...'}]

INFO:langchain.retrievers.web_research:New URLs to load: []

INFO:langchain.retrievers.web_research:Grabbing most relevant splits from urls...

{'question': 'How do LLM Powered Autonomous Agents work?',

'answer': "LLM-powered autonomous agents work by using LLM as the agent's brain, complemented by several key components such as planning, memory, and tool use. In terms of planning, the agent breaks down large tasks into smaller subgoals and can reflect and refine its actions based on past experiences. Memory is divided into short-term memory, which is used for in-context learning, and long-term memory, which allows the agent to retain and recall information over extended periods. Tool use involves the agent calling external APIs for additional information. These agents have been used in various applications, including scientific discovery and generative agents simulation.",

'sources': ''}

Going deeper

- Here is an app that wraps this retriever with a lightweight UI.

Question answering over a website

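To answer questions over a specific website, you can use Apify's Website Content Crawler Actor, which can deeply crawl websites such as documentation, knowledge bases, help centers, or blogs, and extract text content from the web pages.

In the example below, we deeply crawl the Python documentation of LangChain's Chat LLM models and answer a question over it.

First, install the requirements: pip install apify-client langchain-openai langchain

Next, set OPENAI_API_KEY and APIFY_API_TOKEN in your environment variables.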
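
A quick pre-flight check can confirm that both variables are set before any paid API call is made. This is a small convenience sketch; `missing_env_vars` is a hypothetical helper, not part of LangChain or the Apify client.

```python
import os


def missing_env_vars(names: list[str]) -> list[str]:
    """Return the names that are unset or empty in the current environment."""
    return [name for name in names if not os.environ.get(name)]


missing = missing_env_vars(["OPENAI_API_KEY", "APIFY_API_TOKEN"])
if missing:
    print("Set these environment variables before running:", ", ".join(missing))
```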

The full code follows:

from langchain.docstore.document import Document

from langchain.indexes import VectorstoreIndexCreator

from langchain_community.utilities import ApifyWrapper

apify = ApifyWrapper()

# Call the Actor to obtain text from the crawled webpages

loader = apify.call_actor(

actor_id="apify/website-content-crawler",

run_input={"startUrls": [{"url": "/docs/integrations/chat/"}]},

dataset_mapping_function=lambda item: Document(

page_content=item["text"] or "", metadata={"source": item["url"]}

),

)

# Create a vector store based on the crawled data

index = VectorstoreIndexCreator().from_loaders([loader])

# Query the vector store

query = "Are any OpenAI chat models integrated in LangChain?"

result = index.query(query)

print(result)

Yes, LangChain offers integration with OpenAI chat models. You can use the ChatOpenAI class to interact with OpenAI models.
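
The `dataset_mapping_function` in the code above turns each crawled item into a document, using `or ""` so that an item whose text is None still yields a valid, empty page. The sketch below mirrors that mapping with plain dicts to stay dependency-free; `map_item` and the sample items are hypothetical, and it uses `.get("text")` so that a missing key is tolerated as well, which the original lambda does not do.

```python
def map_item(item: dict) -> dict:
    """Map one crawler item to a plain record; missing or None text becomes ''."""
    return {
        "page_content": item.get("text") or "",
        "metadata": {"source": item["url"]},
    }


# Made-up items standing in for crawler output.
items = [
    {"url": "https://example.com/a", "text": "Chat models overview"},
    {"url": "https://example.com/b", "text": None},
]
records = [map_item(item) for item in items]
print(records)
```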